Development

Worker design

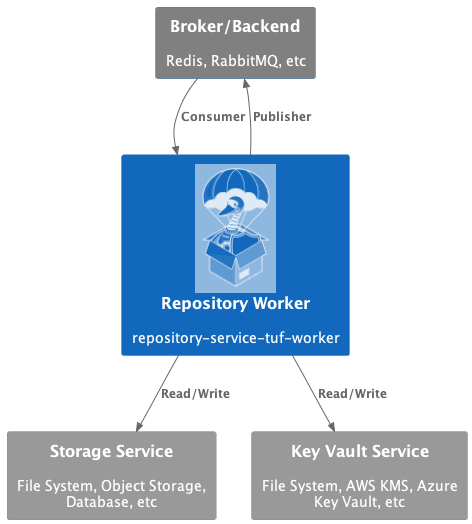

Context level

The repository-service-tuf-worker, in the context perspective, is a Consumer and Publisher of the Broker that receives tasks to perform in the TUF Metadata Repository. The Metadata Repository is stored using a Repository Storage Service that reads and writes this data. To sign this Metadata, the repository-service-tuf-worker uses the Key Vault Repository Service to access the online keys.

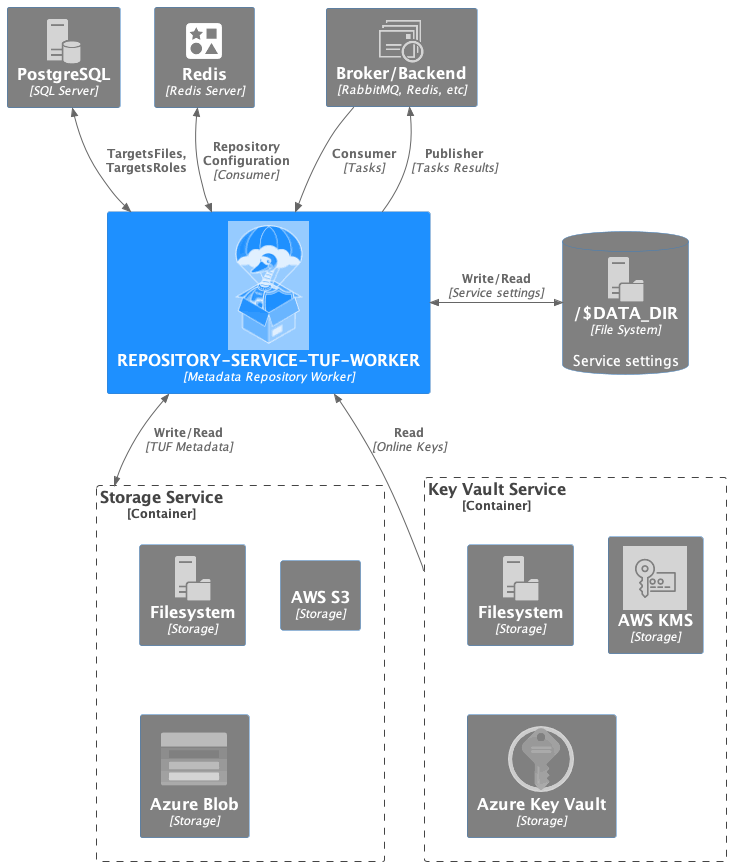

Container level

The repository-service-tuf-worker, in the container perspective, is a Metadata Repository worker that performs actions on the TUF Metadata.

It consumes tasks from the Broker server and executes the task actions in the Metadata Repository, using the Storage Service to handle the TUF Metadata. To sign the Metadata, it uses the Key Vault Service to manage the keys. After executing any action, repository-service-tuf-api publishes to the Broker.

The repository-service-tuf-worker implements the Storage Service and the Key Vault Service to support different technologies for metadata storage and key vault storage.

- Currently supported Storage Service types:

  - LocalStorage (File System)

  - S3Storage (AWS S3 Object Storage – to be implemented)

- Currently supported Key Vault Service types:

  - LocalKeyVault (File System)

  - KMS (AWS KMS – to be implemented)
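The pluggable-backend idea described above can be illustrated with a minimal sketch. The class names `StorageServiceSketch` and `LocalStorageSketch` and the `put`/`get` methods are assumptions made for illustration. They are not the worker's actual interface, which may use different names and signatures.

```python
import tempfile
from abc import ABC, abstractmethod
from pathlib import Path


class StorageServiceSketch(ABC):
    """Hypothetical storage interface (not the worker's real base class)."""

    @abstractmethod
    def put(self, filename: str, data: bytes) -> None:
        """Persist metadata bytes under the given file name."""

    @abstractmethod
    def get(self, filename: str) -> bytes:
        """Return the metadata bytes stored under the given file name."""


class LocalStorageSketch(StorageServiceSketch):
    """File-system backend, loosely modelled on the LocalStorage type."""

    def __init__(self, base_dir: str) -> None:
        self._base = Path(base_dir)
        self._base.mkdir(parents=True, exist_ok=True)

    def put(self, filename: str, data: bytes) -> None:
        (self._base / filename).write_bytes(data)

    def get(self, filename: str) -> bytes:
        return (self._base / filename).read_bytes()


def demo() -> bytes:
    """Write and read back a metadata file through the interface."""
    with tempfile.TemporaryDirectory() as tmp:
        storage: StorageServiceSketch = LocalStorageSketch(tmp)
        storage.put("1.root.json", b'{"signed": {}}')
        return storage.get("1.root.json")
```

With this shape, code that only depends on the abstract interface would not need to change when another backend (for example an object store) is added.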

The repository-service-tuf-worker stores configuration settings. These are the Worker Settings.

The repository-service-tuf-worker also uses the **Repository Settings**, from RSTUF_REDIS_SERVER.

Worker Settings are the operational configurations needed to run the repository-service-tuf-worker, such as the worker id, the Broker, the type of Storage and Key Vault services and their sub-configurations, etc.

Repository Settings are provided by repository-service-tuf-api and stored in RSTUF_REDIS_SERVER. They are used to run routine tasks such as bumping snapshot and timestamp metadata, etc.

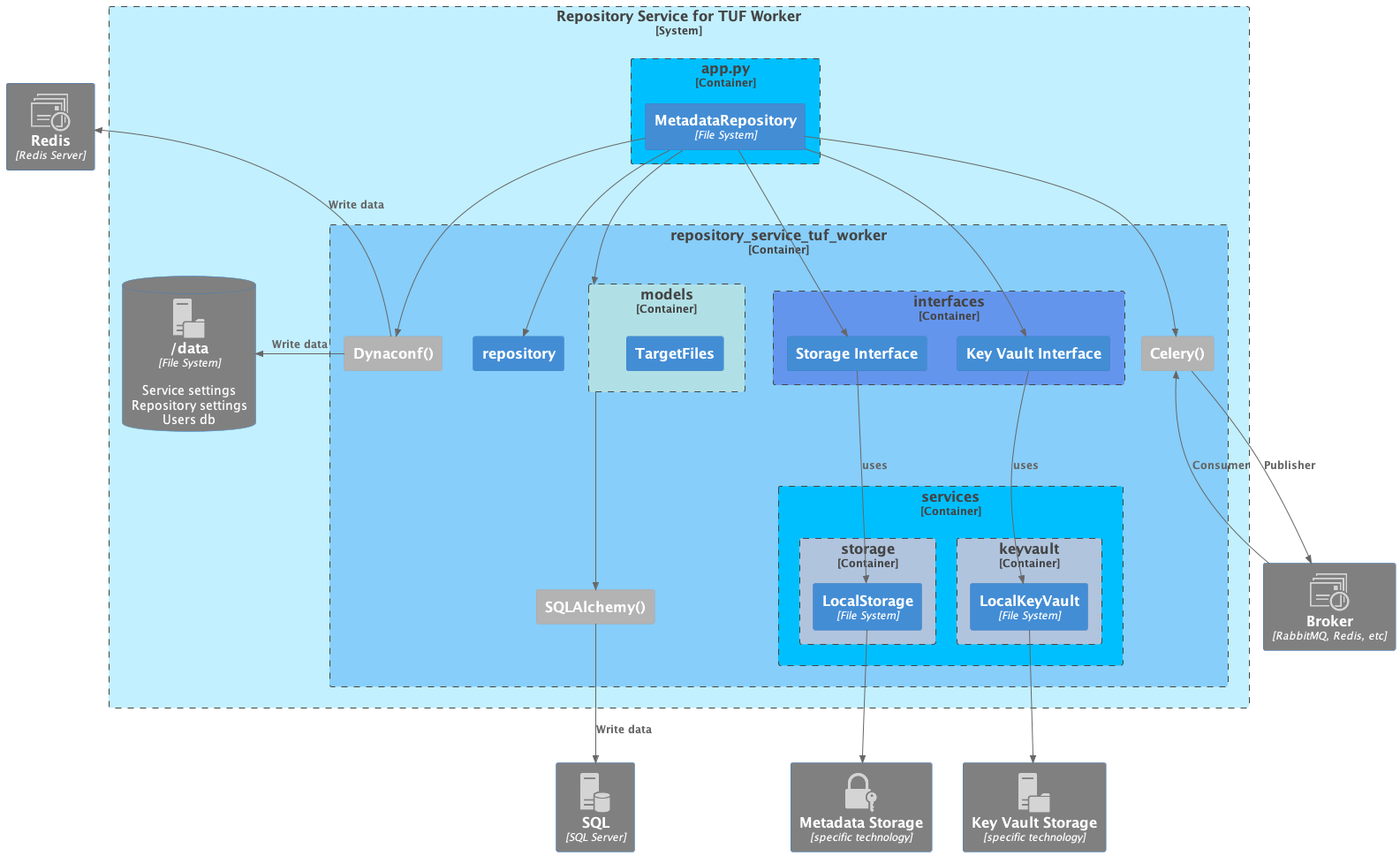

Component level

Component Specific

Distributed Asynchronous Signing

This section describes how Distributed Asynchronous Signing works together with other specific TUF Metadata processes.

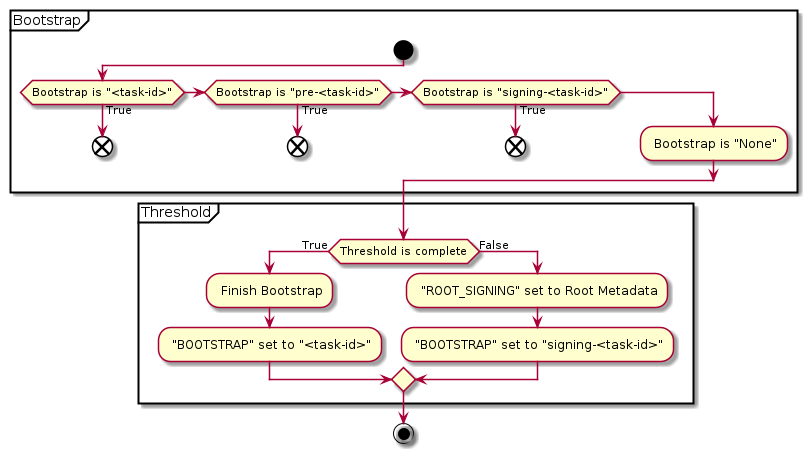

Bootstrap

- If the included root has enough signatures, the task is finalized right away.

- Otherwise, the task is put in a pending state and the half-signed root is cached (RSTUF Setting: ROOT_SIGNING).

Note

See the BOOTSTRAP and ROOT_SIGNING states reference in Architecture Design: TUF Repository Settings.
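The bootstrap decision described above (finalize when the root has enough signatures, otherwise cache the half-signed root) can be sketched as follows. This is a simplified illustration under stated assumptions: `RootSketch` and `SettingsSketch` are hypothetical stand-ins, the state strings are placeholders rather than the real BOOTSTRAP values, and signatures are only counted, not cryptographically verified as real code must do.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class RootSketch:
    """Simplified stand-in for root metadata (not a python-tuf class)."""

    threshold: int
    authorized_keyids: Set[str]
    signatures: Dict[str, str] = field(default_factory=dict)  # keyid -> sig

    def signature_count(self) -> int:
        # Count only signatures made by keys authorized for the root role.
        # A real implementation must also verify each signature.
        return len(set(self.signatures) & self.authorized_keyids)


@dataclass
class SettingsSketch:
    """In-memory stand-in for the cached repository settings."""

    bootstrap_state: str = "none"  # placeholder values, not the real ones
    root_signing: Optional[RootSketch] = None


def bootstrap(root: RootSketch, settings: SettingsSketch) -> str:
    """Finalize if the threshold is met, otherwise cache the root."""
    if root.signature_count() >= root.threshold:
        settings.bootstrap_state = "finalized"
        settings.root_signing = None
    else:
        settings.bootstrap_state = "pending"
        settings.root_signing = root  # half-signed root awaits signatures
    return settings.bootstrap_state
```

In this sketch a signature from an unknown key does not count toward the threshold, so a root signed by one authorized key and one unauthorized key stays pending when the threshold is two.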

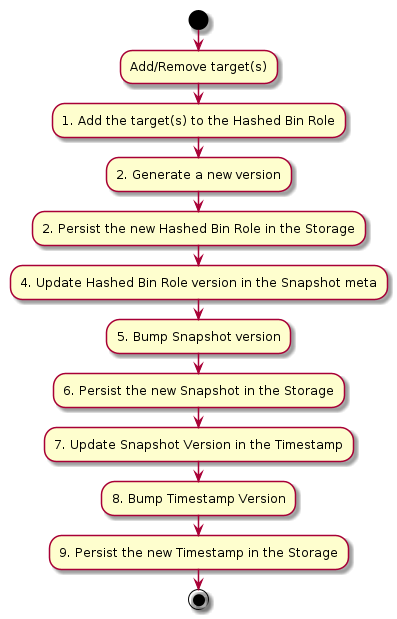

Adding/Removing targets

As mentioned at the container level, the domain of repository-service-tuf-worker (Repository Worker) is managing the TUF Repository Metadata.

The Repository Worker has a Metadata Repository (MetadataRepository) implementation using python-tuf.

The repository implementation has different methods such as adding new targets, removing targets, bumping role metadata versions (e.g. Snapshot and Timestamp), etc.

The Repository Worker handles everything as a task. To handle the tasks, the Repository Worker uses Celery as the Task Manager.

We have two types of tasks:

- First are the tasks that the Repository Worker consumes from the Broker Server. These tasks are published by the Repository Service for TUF API in the repository_metadata queue, sent by an API User.

- Second are the tasks that the Repository Worker generates in the rstuf_internals queue. These are internal tasks for the Repository Worker maintenance.

The tasks are defined in repository-service-tuf-worker/app.py and use Celery Beat as the scheduler.
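As a rough illustration of how internal tasks could be routed to their own queue by a scheduler, the sketch below builds a dictionary in the shape of Celery's `beat_schedule` setting (entries with `task`, `schedule` and `options` keys). The queue names come from the text above. The task names and intervals are assumptions, and the real definitions in app.py may look different.

```python
# Queue names as given in the text above.
API_QUEUE = "repository_metadata"
INTERNAL_QUEUE = "rstuf_internals"

# A dictionary in the shape of Celery's ``beat_schedule`` setting.
# Task names and intervals (in seconds) are hypothetical placeholders.
BEAT_SCHEDULE = {
    "bump_online_roles": {
        "task": "app.bump_online_roles",    # hypothetical task name
        "schedule": 600.0,                  # assumed interval
        "options": {"queue": INTERNAL_QUEUE},
    },
    "publish_targets": {
        "task": "app.publish_targets",      # hypothetical task name
        "schedule": 60.0,                   # assumed interval
        "options": {"queue": INTERNAL_QUEUE},
    },
}


def queues_used(schedule: dict) -> set:
    """Return the set of queues that the scheduled entries publish to."""
    return {entry["options"]["queue"] for entry in schedule.values()}
```

In a Celery application such a dictionary would typically be assigned to `app.conf.beat_schedule`. Here every scheduled entry targets the internal queue, so the API queue is left to tasks published by the API.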

The Repository Worker has two maintenance tasks:

- Bump Roles that contain online keys (“Snapshot”, “Timestamp” and Hashed Bins (“bins-XX”)).

- Publish the new Hashed Bins Target Roles (“bins-XX”) with new/removed targets.

Bumping the roles that contain online keys (bump_online_roles) is straightforward. These roles have a short expiration (defined during repository configuration) and must be “bumped” frequently. The implementation in the RepositoryMetadata:

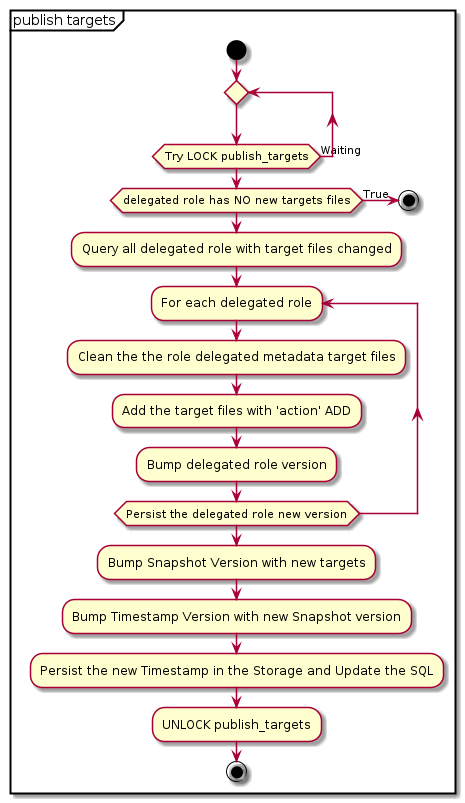
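The idea of bumping short-lived online roles can be sketched as below. This is a minimal sketch with assumptions: `RoleSketch`, the one-day lifetime and the one-hour margin are all hypothetical, and the sketch ignores that a new Hashed Bins or Snapshot version also requires new Snapshot and Timestamp versions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass
class RoleSketch:
    """Simplified stand-in for an online role's metadata."""

    name: str
    version: int
    expires: datetime


def needs_bump(role: RoleSketch, now: datetime, margin: timedelta) -> bool:
    """A role is bumped when it expires within the safety margin."""
    return role.expires - now <= margin


def bump_online_roles(
    roles: List[RoleSketch],
    now: datetime,
    lifetime: timedelta = timedelta(days=1),    # assumed role lifetime
    margin: timedelta = timedelta(hours=1),     # assumed safety margin
) -> List[RoleSketch]:
    """Return the roles, with a new version for each one close to expiry."""
    result = []
    for role in roles:
        if needs_bump(role, now, margin):
            # New version number and a fresh expiration date.
            role = RoleSketch(role.name, role.version + 1, now + lifetime)
        result.append(role)
    return result
```

For example, with `now = datetime(2024, 1, 1, tzinfo=timezone.utc)`, a Timestamp role expiring in 30 minutes would get a new version, while a Snapshot role expiring in two days would be left untouched.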

Publishing the new Hashed Bins Target Roles (publish_targets) is part of the solution for the Repository Worker scalability (Issue 17).

To understand more, every time the API sends a task to add a new target, the Hashed Bins Roles must be changed to add the new target(s), followed by new Snapshot and Timestamp versions.

To give multiple Repository Workers the flexibility to handle multiple tasks without waiting for the entire flow to finish for each task, we split the flow.

We use the ‘waiting time’ to alternate between tasks.

Note

This flow is valid for the Repository Metadata methods add_targets and remove_targets.

The Repository Worker adds/removes the target to/from the SQL Database.

This means that multiple Repository Workers can write multiple Targets (TargetFiles) to the Database simultaneously from various tasks.

The Publish the new Hashed Bins Target Roles task synchronizes the SQL Database with the Hashed Bins Target Roles in the Backend Storage (i.e. JSON files in the filesystem).
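The synchronization step can be pictured with the following sketch. It is an illustration under assumptions, not the worker's code: `TargetRow` is a hypothetical stand-in for a database row, the in-memory `bins` dictionary stands in for the JSON files in the Backend Storage, and `bin_for` is a simplified hash-prefix mapping. A real implementation should rely on python-tuf to choose the delegated role.

```python
import hashlib
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class TargetRow:
    """Stand-in for a row in the SQL Database (hypothetical schema)."""

    path: str
    action: str              # "ADD" or "REMOVE"
    published: bool = False


def bin_for(path: str, bit_length: int = 8) -> str:
    """Map a target path to a bin name using a SHA-256 prefix."""
    digest = hashlib.sha256(path.encode("utf-8")).digest()
    bin_number = int.from_bytes(digest[:4], "big") >> (32 - bit_length)
    width = len(f"{(1 << bit_length) - 1:x}")  # hex digits in the suffix
    return f"bins-{bin_number:0{width}x}"


def publish_targets(rows: List[TargetRow], bins: Dict[str, Set[str]]) -> List[str]:
    """Apply unpublished rows to their bins; return the changed bin names."""
    pending = defaultdict(list)
    for row in rows:
        if not row.published:
            pending[bin_for(row.path)].append(row)
    for role, role_rows in pending.items():
        targets = bins.setdefault(role, set())
        for row in role_rows:  # rows are applied in insertion order
            if row.action == "ADD":
                targets.add(row.path)
            else:
                targets.discard(row.path)
            row.published = True
    return sorted(pending)
```

Grouping the pending rows per bin means each affected bin role is rewritten once per run, regardless of how many tasks added rows for it. A second run with nothing pending changes no bins.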

When a task finishes, it sends a Publish the new Hashed Bins Target Roles task.

Every minute, the routine task Publish the new Hashed Bins Target Roles also runs.

The task will continue running and waiting until all the targets are persisted to the Repository Metadata backend.

The Publish the new Hashed Bins Target Roles task runs one at a time, using locks [1]. It will do:

[1] A lock is used in the Celery task to ensure that the task is executed only one at a time. This prevents two tasks from writing the same metadata and causing a race condition.

RSTUF Worker Backend Services Development

Storage

The default RSTUF Worker source code is configured to use LocalStorage.

Initiate the local development environment

make run-dev

AWSS3

Initiate the AWS development environment

make run-dev DC=aws

KeyVault

The default RSTUF Worker source code is configured to use LocalKeyVault.

Initiate the local development environment

make run-dev

Important issues/problems

Implementation

- repository_service_tuf_worker package

- repository_service_tuf_worker.models package

- repository_service_tuf_worker.models.targets package

- repository_service_tuf_worker.services package

- repository_service_tuf_worker.services.storage package

- repository_service_tuf_worker.services.keyvault package

- repository_service_tuf_worker